The Trust Gap: Why Institutional Allocators Need Better Tools


Institutional hesitation toward tokenized assets has many causes, but due diligence complexity stands out: it is the barrier that makes all the others harder to overcome. Built for a different era, traditional risk frameworks fall short when applied to tokenized assets. What this market demands is a native approach, purpose-built for the infrastructure institutions actually have to evaluate.

The Opportunity and the Hesitation

The growth trajectory is hard to ignore. Tokenized assets have expanded from a niche experiment to a market exceeding $17 billion in tokenized private credit alone, up from $4.1 billion in December 2022.


Institutional market participants are firmly committed to issuing assets in tokenized form, and investors are ready to allocate to them. BlackRock's BUIDL fund has accumulated billions in tokenized U.S. Treasuries. Major asset managers such as Franklin Templeton, Hamilton Lane, and KKR have entered the space. The infrastructure is maturing. Regulatory frameworks are taking shape. And the range of tokenized asset classes continues to broaden, from treasuries and private credit to bonds, CLOs, equities, and commodities like gold.

And yet, a gap persists between institutional interest and institutional action.

This hesitation is not irrational, nor is it driven by a single cause. The reality is more nuanced, and understanding that nuance matters for anyone seeking to accelerate adoption.

A Landscape of Barriers

When we speak with institutional allocators navigating the tokenized asset space, no single concern dominates. Instead, a constellation of barriers emerges:

Credibility concerns: "Is this market mature enough for us? Are the issuers credible? Will this asset class still exist in five years?"

Education gaps: "We don't fully understand the technology. Our investment committee isn't comfortable with blockchain infrastructure."

Regulatory uncertainty: "Does this comply with our jurisdiction's requirements? How do evolving regulations affect our exposure and reporting obligations?"

Liquidity concerns: "Can we exit when we need to? Is there sufficient secondary market depth?"

Access challenges: "How do we even custody this? Which platforms are compliant with our mandates?"

Satisfaction with the status quo: "Our traditional portfolio works fine. Why add complexity and higher costs for a marginal yield improvement?"

Due diligence complexity: "How do we evaluate something that spans smart contracts, blockchain networks, offshore legal structures, and novel custody arrangements?"

Each of these barriers is real. Each deserves attention. And each stands between allocators and the potential advantages tokenization promises - from more efficient settlement and reduced intermediary costs to greater transparency, programmable compliance, and broader liquidity access.

This article focuses on one - due diligence complexity - not because it's the only barrier, but because it has a unique characteristic: it amplifies all the others.

Why Traditional Approaches to Risk Fall Short

Traditional due diligence frameworks were built for analog assets. Evaluate the issuer's creditworthiness. Review the legal documentation. Assess the underlying collateral. These methodologies have been refined over decades and remain essential for traditional finance.

But tokenized assets introduce additional layers of complexity that traditional frameworks weren't designed to address.

Consider what an allocator must now evaluate:

• The Issuer Layer: Who is behind this asset? What is their track record? Are they regulated, and in which jurisdictions? This is familiar territory.

• The Token Layer: How is the asset represented onchain? What smart contracts govern issuance, transfers, and redemptions? Have those contracts been audited? What permissions exist, and who controls them?

• The Blockchain Layer: Which network hosts the asset? What is its security model, validator diversity, and track record of uptime? If the asset is deployed across multiple chains - as many now are - how does risk vary across each deployment?

• The Operational Layer: How does the onchain token connect to the offchain asset? Who are the new counterparties? Who is the custodian? The administrator? The oracle provider feeding price data? What happens if any of these service providers fail?

A single tokenized treasury fund might involve a BVI-regulated issuer, smart contracts on Ethereum and Base, cross-chain messaging via LayerZero, oracle feeds from a third-party provider, custody through a U.S. broker-dealer, and administration by an offshore fund administrator.

Each component introduces dependencies, and each dependency introduces additional risk.
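To make the compounding concrete, the sketch below inventories the example fund by layer and computes the chance that at least one dependency fails. It is a minimal Python illustration under stated assumptions, not a risk model: the layer assignments are our own reading of the four layers above, the 1% per-dependency failure probability is hypothetical, and the formula 1 - (1 - p)^n assumes failures are independent, which real dependencies (for example, two deployments relying on the same bridge) often are not.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str
    layer: str  # "issuer", "token", "blockchain" or "operational"


# Hypothetical inventory of the example fund described above.
# The layer assignments are an illustrative mapping, not a standard taxonomy.
EXAMPLE_FUND = [
    Dependency("BVI-regulated issuer", "issuer"),
    Dependency("Smart contracts on Ethereum", "token"),
    Dependency("Smart contracts on Base", "token"),
    Dependency("Ethereum network", "blockchain"),
    Dependency("Base network", "blockchain"),
    Dependency("LayerZero cross-chain messaging", "blockchain"),
    Dependency("Third-party oracle feed", "operational"),
    Dependency("U.S. broker-dealer custodian", "operational"),
    Dependency("Offshore fund administrator", "operational"),
]


def count_by_layer(deps: list[Dependency]) -> dict[str, int]:
    """Number of dependencies in each layer."""
    return dict(Counter(d.layer for d in deps))


def prob_any_failure(n: int, p: float) -> float:
    """Probability that at least one of n dependencies fails, assuming each
    fails independently with the same probability p over the period."""
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    print(count_by_layer(EXAMPLE_FUND))
    p = 0.01  # hypothetical per-dependency failure probability
    print(f"single dependency: {prob_any_failure(1, p):.2%}")
    n = len(EXAMPLE_FUND)
    print(f"full stack ({n} dependencies): {prob_any_failure(n, p):.2%}")
```

With these placeholder numbers, a 1% chance of failure per dependency becomes about an 8.6% chance that something in the nine-part stack fails, which is the intuition behind "each dependency introduces additional risk."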

Traditional credit ratings - designed to assess an issuer's ability to meet financial obligations - capture only part of this picture.

The Expertise Gap

Perhaps most critically, the skill sets required to evaluate tokenized assets span disciplines that rarely overlap. Credit analysts understand financial structures but may lack blockchain expertise. Smart contract auditors understand code but may miss legal or economic red flags. Compliance officers understand regulation but may not grasp the nuances of cross-chain interoperability.

Few institutions have built teams that bridge all of these domains. Fewer still have developed systematic frameworks for integrating these perspectives into a coherent risk assessment.

How Due Diligence Complexity Amplifies Other Barriers

Here's what makes the due diligence gap particularly consequential: it doesn't exist in isolation. It makes every other barrier harder to overcome.

It deepens credibility concerns.

When allocators struggle to verify claims independently, credibility remains abstract. An issuer might assert regulatory compliance, robust custody, and audited smart contracts, but without tools to verify these claims efficiently, allocators must rely on trust. In a nascent market where trust hasn't been fully established, this creates a credibility deficit that even legitimate, well-structured products struggle to overcome.

Better due diligence infrastructure - particularly onchain verification mechanisms like proof-of-reserves - could transform credibility from something asserted to something demonstrated.
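What "demonstrated" could mean in practice is sketched below: a minimal Python check, under stated assumptions, that a reserve attestation matches a publicly committed hash, is recent, and covers the tokens outstanding across every chain the asset is deployed on. The names (`ReserveAttestation`, `check_reserves`, `nav_per_token_usd`) are hypothetical. In a real setup the per-chain supplies would come from each deployment's ERC-20 `totalSupply()` and the published hash from an onchain record; here both are simply passed in. Note that this verifies consistency between claims; it cannot prove that the custodian's reported figure is itself true.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ReserveAttestation:
    reserves_usd: float  # value of the backing assets reported by the custodian
    as_of: datetime      # when the reserves were measured
    report: bytes        # raw attestation report as published


def check_reserves(
    attestation: ReserveAttestation,
    supply_by_chain: dict[str, float],
    nav_per_token_usd: float,
    published_sha256: str,
    max_age: timedelta,
    now: datetime,
) -> list[str]:
    """Return a list of findings; an empty list means every check passed."""
    findings = []
    # 1. The report must match the hash the issuer committed to publicly.
    if hashlib.sha256(attestation.report).hexdigest() != published_sha256:
        findings.append("report does not match published hash")
    # 2. A stale attestation says little about reserves today.
    if now - attestation.as_of > max_age:
        findings.append("attestation is stale")
    # 3. Reserves must cover the tokens outstanding on every chain combined.
    liabilities = sum(supply_by_chain.values()) * nav_per_token_usd
    if liabilities > 0 and attestation.reserves_usd < liabilities:
        ratio = attestation.reserves_usd / liabilities
        findings.append(f"under-collateralized (ratio {ratio:.4f})")
    return findings


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    report = b"example attestation report"
    att = ReserveAttestation(1_000_000.0, now - timedelta(hours=6), report)
    supplies = {"ethereum": 600_000.0, "base": 400_000.0}  # hypothetical
    print(check_reserves(att, supplies, 1.0,
                         hashlib.sha256(report).hexdigest(),
                         timedelta(days=1), now))
```

Summing supply across chains before comparing it with reserves matters for multi-chain assets: checking each deployment in isolation would miss tokens minted elsewhere against the same reserves.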

It compounds liquidity problems.

Liquidity is, in part, a function of allocator participation. When due diligence is expensive and time-consuming, fewer allocators complete the process. Fewer allocators means less capital deployed. Less capital means thinner markets. Thinner markets mean the liquidity concerns were justified - creating a self-reinforcing cycle.

Fragmentation makes this worse. More than $261 billion in tokenized assets and stablecoins now span over 27 blockchain networks, fragmented across 89+ platforms - each with different bridge paths, custody arrangements, and settlement mechanisms. The result is not one illiquid market, but dozens of disconnected liquidity silos, each too shallow to give institutional allocators the depth they require.

It reinforces first-mover fear.

In conversations with allocators, a recurring theme emerges: no one wants to be first.

When due diligence is difficult, allocators naturally look to peers for validation. If no other credible institution has invested in a particular tokenized asset, the unspoken assumption is that others have found something concerning or that the reputational risk of being wrong alone outweighs the potential return.

This creates a coordination problem. Allocators wait for others to move. Others are waiting for the same signal. Capital that could flow into the market remains on the sidelines - not because the opportunities are poor, but because the tools to confidently evaluate them are lacking.

Standardized, credible due diligence frameworks could break this logjam by giving allocators confidence to act without waiting for peers.

It strengthens the case for the status quo.

For an allocator weighing whether to add tokenized assets to a portfolio, the calculus is straightforward: potential return versus effort and risk. When due diligence requires assembling expertise across legal, technical, and financial domains - often without established frameworks to follow - the effort tilts the equation toward "do nothing."

Traditional assets, by contrast, benefit from decades of standardized evaluation methodologies, established rating agencies, and institutional familiarity. The status quo is simply easier.

Reducing the friction of due diligence doesn't just help allocators evaluate tokenized assets - it changes the cost-benefit calculation that currently favors inaction.

What Better and More Modern Tools Look Like

If current frameworks are inadequate, what would better tools look like? Based on our work with institutional allocators navigating these challenges, several characteristics emerge as essential.

• Real-Time, Continuous Monitoring

Risk assessment cannot be a point-in-time exercise. Allocators need tools that monitor assets continuously - tracking onchain activity, collateral movements, smart contract changes, and market conditions. When something changes, they need to know immediately, not in the next quarterly report.

• Multi-Dimensional Assessment Frameworks

Effective evaluation must span all layers of the stack: issuer, token, blockchain, and operations. A methodology that addresses only credit risk, or only smart contract security, provides an incomplete picture. The most useful frameworks integrate these dimensions into a coherent, comparable risk assessment.

• Onchain Verification

In a trust-minimized environment, the most valuable tools provide onchain verification: proof-of-reserves that can be checked independently, rating data that lives onchain rather than in documents, audit trails that are immutable and transparent.

When credibility can be verified rather than asserted, the credibility barrier begins to dissolve.

• Standardization Across Asset Classes

Allocators managing diversified portfolios need consistent frameworks they can apply across asset types. A tokenized treasury, a tokenized bond, and a tokenized private credit facility should be evaluable using comparable methodologies, enabling portfolio-level risk management.

• Actionable Intelligence

Perhaps most importantly, better tools should enable action - not just inform. Sophisticated allocators aren't looking for more reports to read. They're looking for infrastructure that integrates directly into their processes: alerts when thresholds are breached, data feeds that connect to portfolio systems, risk signals that can trigger predefined responses.
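Several of the characteristics above (multi-dimensional assessment, standardization across asset classes, continuous monitoring, and actionable alerts) can be combined in one small sketch. Assuming each asset receives a score per layer on a hypothetical 0 to 100 scale (higher meaning lower risk), the Python code below averages the four layers into a composite, reports the weakest layer separately, and returns an alert whenever a layer falls below a threshold. The equal weights, the 60-point threshold, and the example scores are placeholders, not Particula's methodology; in practice `on_update` would be wired to event and data feeds rather than called by hand.

```python
from dataclasses import dataclass

LAYERS = ("issuer", "token", "blockchain", "operational")

# Hypothetical equal weights; a real methodology would calibrate these.
WEIGHTS = {layer: 0.25 for layer in LAYERS}


@dataclass(frozen=True)
class Alert:
    asset: str
    layer: str
    score: float
    threshold: float


def composite(scores: dict[str, float]) -> float:
    """Weighted average across all four layers; fails loudly if one is missing."""
    missing = [layer for layer in LAYERS if layer not in scores]
    if missing:
        raise ValueError(f"missing layer scores: {missing}")
    return sum(WEIGHTS[layer] * scores[layer] for layer in LAYERS)


def weakest_layer(scores: dict[str, float]) -> str:
    """The layer with the lowest score; an average can hide a single weak link."""
    return min(LAYERS, key=lambda layer: scores[layer])


def on_update(asset: str, scores: dict[str, float],
              thresholds: dict[str, float]) -> list[Alert]:
    """Intended to run whenever new data changes a layer score.
    Returns one alert per layer that is below its threshold."""
    return [Alert(asset, layer, scores[layer], thresholds[layer])
            for layer in LAYERS if scores[layer] < thresholds[layer]]


if __name__ == "__main__":
    thresholds = {layer: 60.0 for layer in LAYERS}  # hypothetical
    portfolio = {  # hypothetical scores for two asset types
        "tokenized treasury":
            {"issuer": 85, "token": 80, "blockchain": 90, "operational": 75},
        "tokenized private credit":
            {"issuer": 70, "token": 55, "blockchain": 88, "operational": 65},
    }
    for asset, scores in portfolio.items():
        print(asset, composite(scores), weakest_layer(scores))
        for alert in on_update(asset, scores, thresholds):
            print("ALERT:", alert)
```

Applying the same four layers and the same functions to a tokenized treasury and a tokenized private credit facility is what makes their results comparable at the portfolio level. Reporting the weakest layer alongside the average matters because a strong composite can mask a single failing dependency.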

A Barrier Worth Solving

The hesitation among institutional allocators toward tokenized assets is understandable and multi-faceted. No single solution will unlock the market overnight.

But due diligence complexity occupies a unique position among these barriers. It's not just one more obstacle - it's the one that makes all the others harder to clear. And the inverse holds: closing the due diligence, or trust, gap doesn't just help allocators evaluate individual assets. It unlocks the coordination, credibility, and liquidity the market needs to mature.

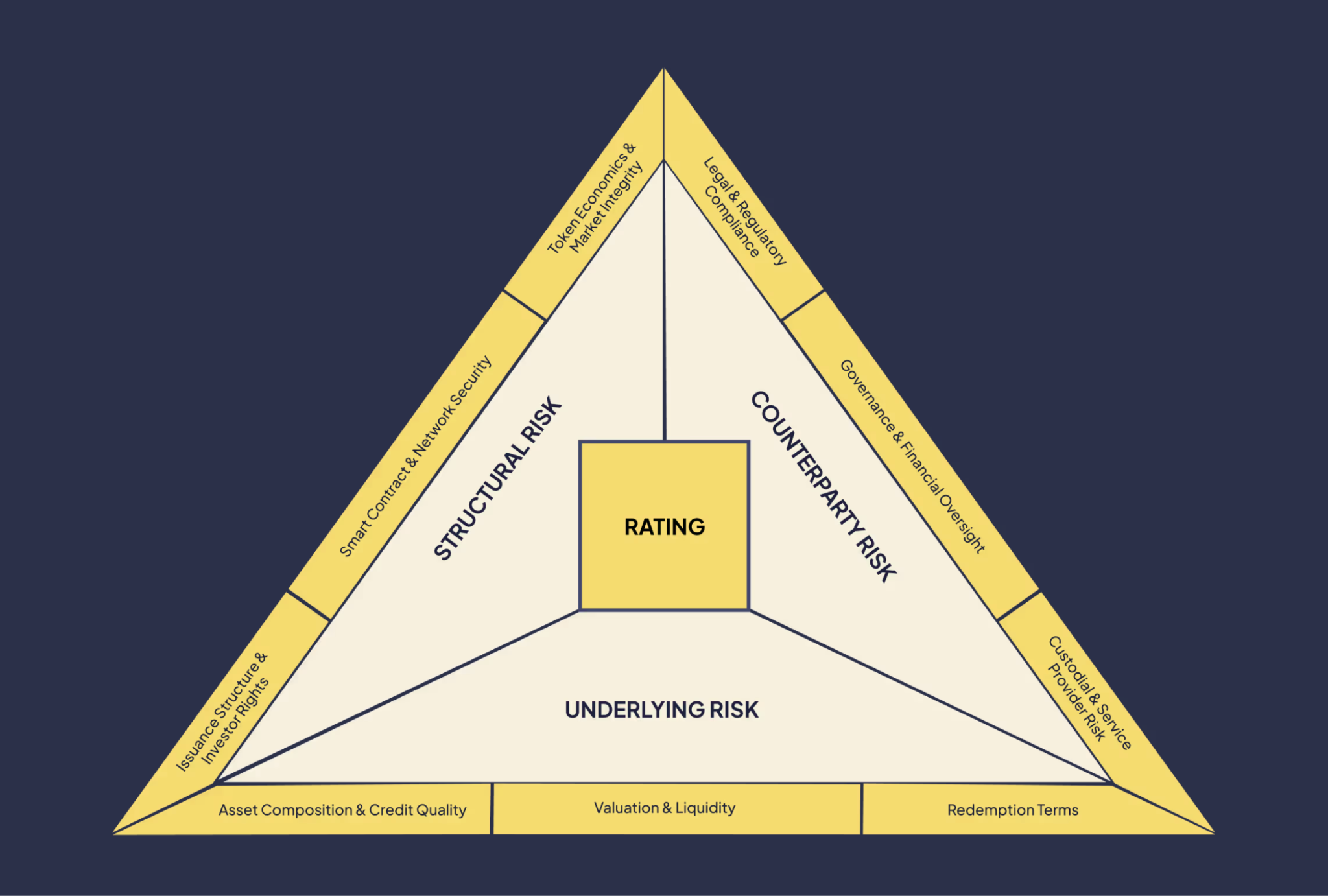

At Particula, we work directly with institutional allocators facing these challenges. Our experience informs both our rating methodology, which spans structural, counterparty, and underlying risk, and our conviction that the market needs more than static reports. It needs risk infrastructure that moves at the speed of onchain finance.

The barriers to institutional adoption are real, but they're not permanent. The allocators who build robust evaluation capabilities now will be best positioned as the market matures.

About Particula

Particula is the prime rating provider for digital assets, transforming on- and off-chain data into actionable insights. The company delivers next-generation risk ratings and comprehensive analyses across issuers and counterparties, issuance structure, technical implementation, and underlying risk, providing the clarity and confidence needed to navigate the complexities of digital finance.
